Kompuutaa ('computer' transliterated into the Japanese syllabary and then back into the Roman alphabet) is a Beowulf cluster consisting of 24 dual-processor PentiumII machines (48 processors in total) linked by fast ethernet, running Linux and communicating via MPI. It is used for simulations of convection inside the Earth and (sometimes) for simulations of seismic wave propagation. It resides in the Earth and Space Sciences Department at UCLA and has been built up over time: it was started in the summer of 1998, reached a 30-processor configuration in November 1998, and reached the present 48-processor configuration in August 1999.

The entire system was built from components, not by buying ready-made PCs. Prices of most major components have dropped by a factor of at least 2 since the start of the project, and CPUs have become much faster. A 48-processor configuration could now be built from components for roughly $30,000-40,000, giving fantastic performance for only the cost of a high-end Unix workstation!

Such clusters are now becoming widespread, and there is nothing particularly unusual about this one, but I give some hardware/software specifications, design issues, and performance tests here which may be useful for anyone thinking about building their own. There are also instructions for usage and for using the PBS queueing system.

The conventional wisdom is that dual-processor (SMP) units do not work well because the processors are competing for the same memory bus (and in this case, the same ethernet channel), so you don't get twice the speed. However, tests indicate that the applications we want to run do get good speedup on dual-CPU boards (some numbers later...of course, you don't get twice the speed by using 2 processors in different boxes, either). Other applications may be different, but here are some arguments in favor of using dual-CPU boards:

PCs using the DEC Alpha chip look very promising in principle, and we do indeed get faster performance from them (see later). However, despite the very high theoretical peak speed of the Alpha CPU, typical applications realize only a small fraction of it. In addition, the price of PII systems has gone down greatly in the last year whereas Alphas have not gone down very much, so that a dual-PII system now costs less than a single-Alpha system. The test given later shows that, for the applications of interest to us, PentiumII CPUs give a better price:performance ratio.

The cluster size of 24 machines matches the size of the fast ethernet switch. 24-way fast ethernet switches can be obtained for a little more than $1000. Larger ones are much more expensive, e.g., $5000 for a 36-way switch.
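The switch prices quoted above can be compared per port. The following is only a rough sketch: it takes the 24-way price as exactly $1000 (the text says "a little more than"), and the function name `cost_per_port` is made up for this illustration.

```python
def cost_per_port(switch_price_usd, ports):
    """Return the switch price divided evenly over its ports."""
    return switch_price_usd / ports


# Prices from the text; the 24-way figure is rounded down to $1000 (assumption).
small = cost_per_port(1000, 24)
large = cost_per_port(5000, 36)

print(f"24-way switch: ${small:.2f} per port")
print(f"36-way switch: ${large:.2f} per port")
print(f"The larger switch costs about {large / small:.1f}x more per port")
```

On these figures the 36-way switch costs a bit over three times as much per port, which is why stopping at 24 machines is attractive.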

Building from components generally saves a lot of money, and allows you to get the exact specification desired. It takes about 1 hour to assemble each PC. It is not necessary to go through the Linux installation process for each PC since system disks can be easily 'cloned' using the 'dd' command, as described on Caltech's website.

We have had some minor problems.

(i) We tried 2 other types of motherboard and couldn't get them to work properly (which isn't to say that they absolutely can't be made to work, but we gave up). In particular, on a Soyo 6BI?? motherboard the BIOS would not recognize the boot partition on an existing system disk, didn't seem to allow installation of the boot loader from the Red Hat Linux installer, and even refused to boot without a keyboard attached. By contrast, the ASUS P2B-D motherboards have worked perfectly every time, 26 of them so far.

(ii) The PCI ATA/33 IDE controller card did not work with Linux kernel 2.0.34 (which came with Red Hat 5.1), but it was a simple matter to download and install a more recent kernel (2.0.37) from ftp.kernel.org. However, an ATA/66 controller we have does not work even with kernel 2.2.5 (Red Hat 6.0).

(iii) A 37 GB IDE disk (by IBM) could not be recognized by the motherboard's BIOS and has to be treated as 32 GB. The ATA/33 controller card can also only detect it as 32 GB; this is why we were trying an ATA/66 controller. Upgrades to the BIOS and/or Linux will no doubt fix this in the near future.

(iv) The Linux Disk Druid utility had problems with a large (16 GB) IDE disk: it couldn't recognize the proper number of cylinders and tried to treat the disk as only 8 GB. We got around this (for that system disk) by using fdisk to set the correct numbers. For data disks it is not necessary to set up partitions: just connect the disk and run mkfs followed by mount. This has worked every time and recognized the correct disk size.

(v) The 'tulip' ethernet driver we were using, which used to work, did not work with the latest (D1) version of the Netgear FA310TX ethernet adaptors. Downloading and installing the latest tulip driver from CESDIS fixed that.

A UCLA electrician recently measured the current usage of the cluster as 13 Amps, corresponding to 1430 Watts. The cluster fills a small office with air conditioning on full blast and it is usually cold in there.
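The two figures above are consistent with a supply of about 110 V (1430 W / 13 A). That voltage is inferred from the numbers, not something reported by the electrician. A minimal sketch of the arithmetic follows, assuming a simple volts-times-amps estimate that ignores power factor, and assuming the measurement covers the 24 compute boxes only; the helper name `power_watts` is made up for this example.

```python
def power_watts(current_amps, voltage_volts):
    """Estimate power as current times voltage (ignores power factor)."""
    return current_amps * voltage_volts


MEASURED_CURRENT_A = 13      # from the text
ASSUMED_VOLTAGE_V = 110      # assumption: inferred from 1430 W / 13 A
NUM_BOXES = 24               # dual-processor machines in the cluster

total = power_watts(MEASURED_CURRENT_A, ASSUMED_VOLTAGE_V)
per_box = total / NUM_BOXES

print(f"Total: {total} W")
print(f"Per box: {per_box:.1f} W")
```

Under those assumptions each dual-processor box draws roughly 60 W on average.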

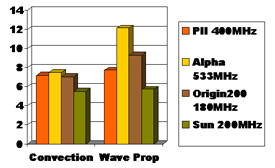

These are some tests I did in summer 1998. Of course, faster processors of all these types are now available, but this comparison, in which the PII holds its own against much more expensive CPUs, was influential in choosing the PentiumII architecture. Two different codes were tested: a finite-volume convection simulation code (left 4 bars) and a finite-difference wave propagation code (right 4 bars). The height of each bar is proportional to speed (higher is better).

Three applications have been tested: two different 3-D convection codes and a 3-D seismic wave propagation code. One of these doesn't scale very well, while the other two scale well with the right parameters.

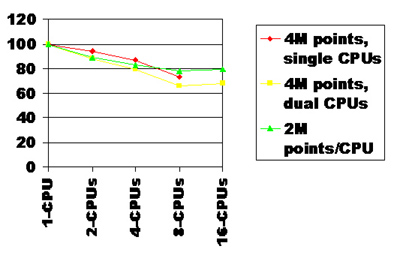

Code 1: 3D Finite-difference elastic wave propagation

The scaling is tested either with a fixed problem size (4 million grid points) or with a problem size proportional to the number of nodes (2 million points/CPU). Another comparison is to use either 1 CPU per box (with the other one sitting idle) or both CPUs per box (i.e., the SMP issue). The code was compiled using g77. The plot shows parallel EFFICIENCY (i.e., % of ideal speedup).
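For readers unfamiliar with the term, parallel efficiency can be computed from wall-clock timings roughly as sketched here. This is one common pair of definitions, not necessarily the exact formula used for the plots; the function names and the timing values are placeholders invented for illustration, not measurements from this cluster.

```python
def fixed_size_efficiency(t1, tn, n):
    """Efficiency (%) for a fixed problem size.

    Ideal time on n CPUs is t1 / n, so efficiency = t1 / (n * tn).
    """
    return 100.0 * t1 / (n * tn)


def scaled_size_efficiency(t1, tn):
    """Efficiency (%) when the problem size grows in proportion to n.

    Work per CPU is constant, so the ideal time on n CPUs equals t1.
    """
    return 100.0 * t1 / tn


# Placeholder timings in seconds (hypothetical, NOT measured values).
print(f"{fixed_size_efficiency(t1=100.0, tn=5.0, n=24):.1f}%")
print(f"{scaled_size_efficiency(t1=100.0, tn=125.0):.1f}%")
```

The fixed-size test shows how much faster a given problem runs as CPUs are added, while the scaled test shows how well the cluster handles proportionally larger problems in the same time.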

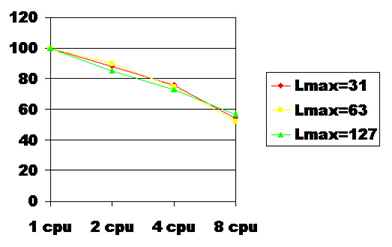

Code 2: 3D Spherical convection using a spectral transform method

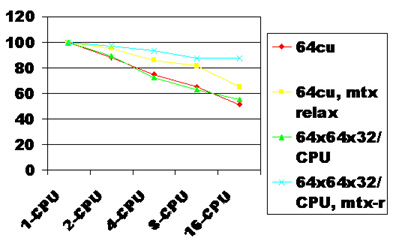

Code 3: 3D cartesian convection, multigrid, grid-based method

The efficiency tests below compare these 2 methods, as well as fixed vs. scaled problem size.